Databases

STAR DATABASE INFORMATION PAGES

Frequently Asked Questions

Frequently Asked Questions

Q: I am completely new to databases, what should I do first?

A: Please, read this FAQ list, and database API documentation :

Database documentation

Then, please read How to request new db tables

Don't forget to log in, most of the information is STAR-specific and is protected; If our documentation pages are missing some information (that's possible), please as questions at db-devel-maillist.

Q: I think, I've encountered database-related bug, how can I report it?

A: Please report it using STAR RT system (create ticket), or send your observations to db-devel maillist. Don't hesitate to send ANY db-related questions to db-devel maillist, please!

Q: I am subsystem manager, and I have questions about possible database structure for my subsystem. Whom should I talk to discuss this?

A: Dmitry Arkhipkin is current STAR database administrator. You can contact him via email, phone, or just stop by his office at BNL:

Phone: (631)-344-4922

Email: arkhipkin@bnl.gov

Office: 1-182

Q: why do I need API at all, if I can access database directly?

A: There are a few moments to consider :

a) we need consistent data set conversion from storage format to C++ and Fortran;

b) our data formats change with time, we add new structures, modify old structures;

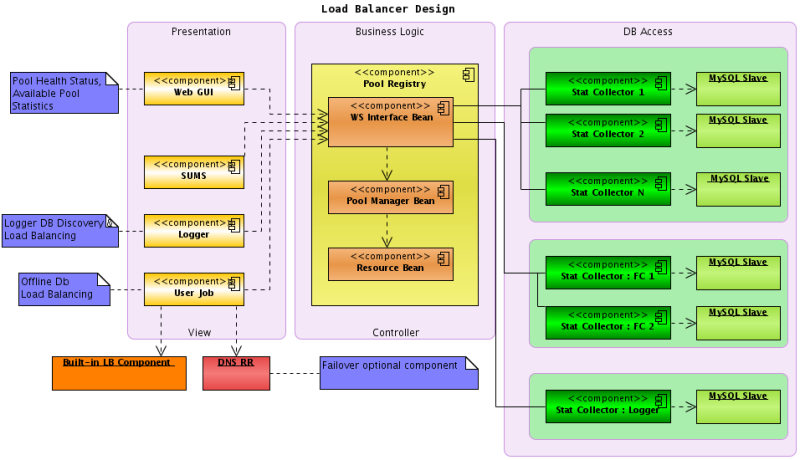

b) direct queries are less efficient than API calls: no caching,no load balancing;

c) direct queries mean more copy-paste code, which generally means more human errors;

We need API to enable: schema evolution, data conversion, caching, load balancing.

Q: Why do we need all those databases?

A: STAR has lots of data, and it's volume is growing rapidly. To operate efficiently, we must use proven solution, suitable for large data warehousing projects – that's why we have such setup, there's simply no subpart we can ignore safely (without overall performance penalty).

Q: It is so complex and hard to use, I'd stay with plain text files...

A: We have clean, well-defined API for both Offline and FileCatalog databases, so you don't have to worry about internal db activity. Most db usage examples are only a few lines long, so really, it is easy to use. Documentation directory (Drupal) is being improved constantly.

Q: I need to insert some data to database, how can I get write access enabled?

A: Please send an email with your rcas login and desired database domain (e.g. "Calibrations/emc/[tablename]") to arkhipkin@bnl.gov (or current database administrator). Write access is not for everyone, though - make sure that you are either subsystem coordinator, or have proper permission for such data upload.

Q: How can I read some data from database? I need simple code example!

A: Please read this page : DB read example

Q: How can I write something to database? I need simple code example!

A: Please read this page : DB write example

Q: I'm trying to set '001122' timestamp, but I cannot get records from db, what's wrong?

A: In C++, numbers starting with '0' are octals, so 001122 is really translated to 594! So, if you need to use '001122' timestamp (any timestamp with leading zeros), it should be written as simply '1122', omitting all leading zeros.

Q: What time zone is used for a database timestamps? I see EDT and GMT being used in RunLog...

A: All STAR databases are using GMT timestamps, or UNIX time (seconds since epoch, no timezone). If you need to specify a date/time for db request, please use GMT timestamp.

Q: It is said that we need to document our subsystem's tables. I don't have privilege to create new pages (or, our group has another person responsible for Drupal pages), what should I do?

A: Please create blog page with documentation - every STAR user has this ability by default. It is possible to add blog page to subsystem documentation pages later (webmaster can do that).

Q: Which file(s) is used by Load Balancer to locate databases, and what is the order of precedence for those files (if many available)?

A: Files being searched by LB are :

1. $DB_SERVER_LOCAL_CONFIG env var, should point to new LB version schema xml file (set by default);

2. $DB_SERVER_GLOBAL_CONFIG env. var, should point to new LB version schema xml file (not set by default);

3. $STAR/StDb/servers/dbLoadBalancerGlobalConfig.xml : fallback for LB, new schema expected;

if no usable LB configurations found yet, following files are being used :

1. $STDB_SERVERS/dbServers.xml - old schema expected;

2. $HOME/dbServers.xml - old schema expected;

3. $STAR/StDb/servers/dbServers.xml - old schema expected;

DB access: read data

// this will be in chain

St_db_Maker *dbMk=new St_db_Maker("db", "MySQL:StarDb", "$STAR/StarDb");

dbMk->SetDebug();

dbMk->SetDateTime(20090301,0); // event or run start time

dbMk->SetFlavor("ofl"); // pick up offline calibrations

// dbMk->SetFlavor("simu"); // use simulations calibration set

dbMk->Init();

dbMk->Make();

// this is done inside ::Make method

TDataSet *DB = 0;

// "dbMk->" will NOT be needed, if done inside your [subsys]DbMaker.

// Simply use DB = GetInputDb("Calibrations/[subsystem_name]")

DB = dbMk->GetInputDB("Calibrations/[subsystem_name]");

if (!DB) { std::cout << "ERROR: no db maker?" << std::endl; }

St_[subsystem_name][table_name] *[table_name] = 0;

[table_name] = (St_[subsystem_name][table_name]*) DB->Find("[subsystem_name][table_name]");

// fetch data and place it to appropriate structure

if ([table_name]) {

[subsystem_name][table_name]_st *table = [table_name]->GetTable();

std::cout << table->param1 << std::endl;

std::cout << table->paramN << std::endl;

}

DB access: write data

// Initialize db manager

StDbManager* mgr = StDbManager::Instance();

StDbConfigNode* node = mgr->initConfig("Calibrations_[subsystem_name]");

StDbTable* table = node->addDbTable("[subsystem_name][table_name]");

TString storeTime = "2009-01-02 00:00:00"; // calibration timestamp

mgr->setStoreTime(storeTime.Data());

// create your c-struct and fill it with data

[subsystem_name][table_name]_st [tablename];

[table_name].param1 = [some_value];

[table_name].paramN = [some_other_value];

// store data in the table

table->SetTable((char*)&[table_name]);

// store table in dBase

mgr->storeDbTable(table);

Timestamps

TIMESTAMPS

There are three timestamps used in STAR databases;

| beginTime | This is STAR user timestamp and it defines ia validity range |

| entryTime | insertion into the database |

| deactive | either a 0 or a UNIX timestamp - used for turning off a row of data |

EntryTime and deactive are essential for 'reproducibility' and 'stability' in production.

The beginTime is the STAR user timestamp. One manifistation of this, is the time recorded by daq at the beginning of a run. It is valid until the the beginning of the next run. So, the end of validity is the next beginTime. In this example it the time range will contain many event times which are also defined by the daq system.

The beginTime can also be use in calibration/geometry to define a range of valid values.

EXAMPLE: (et = entryTime) The beginTime represents a 'running' timeline that marks changes in db records w/r to daq's event timestamp. In this example, say at some time, et1, I put in an initial record in the db with daqtime=bt1. This data will now be used for all daqTimes later than bt1. Now, I add a second record at et2 (time I write to the db) with beginTime=bt2 > bt1. At this point the 1st record is valid from bt1 to bt2 and the second is valid for bt2 to infinity. Now I add a 3rd record on et3 with bt3 < bt1 so that

1st valid bt1-to-bt2, 2nd valid bt2-to-infinity, 3rd is valid bt3-to-bt1.

Let's say that after we put in the 1st record but before we put in the second one, Lydia runs a tagged production that we'll want to 'use' forever. Later I want to reproduce some of this production (e.g. embedding...) but the database has changed (we've added 2nd and 3rd entries). I need to view the db as it existed prior to et2. To do this, whenever we run production, we defined a productionTimestamp at that production time, pt1 (which is in this example < et2). pt1 is passed to the StDbLib code and the code requests only data that was entered before pt1. This is how production in 'reproducible'.

The mechanism also provides 'stability'. Suppose at time et2 the production was still running. Use of pt1 is a barrier to the production from 'seeing' the later db entries.

Now let's assume that the 1st production is over, we have all 3 entries, and we want to run a new production. However, we decide that the 1st entry is no good and the 3rd entry should be used instead. We could delete the 1st entry so that 3rd entry is valid from bt3-to-bt2 but then we could not reproduce the original production. So what we do is 'deactivate' the 1st entry with a timestamp, d1. And run the new production at pt2 > d1. The sql is written so that the 1st entry is ignored as long as pt2 > d1. But I can still run a production with pt1 < d1 which means the 1st entry was valid at time pt1, so it IS used.

One word of caution, you should not deactivate data without training!

email your request to the database expert.

In essence the API will request data as following:

'entryTime <productionTime<deactive || entryTime< productionTime & deactive==0.'

To put this to use with the BFC a user must use the dbv switch. For example, a chain that includes dbv20020802 will return values from the database as if today were August 2, 2002. In other words, the switch provides a user with a snapshot of the database from the requested time (which of coarse includes valid values older than that time). This ensures the reproducability of production.

If you do not specify this tag (or directly pass a prodTime to StDbLib) then you'll get the latest (non-deactivated) DB records.

Below is an example of the actual queries executed by the API:

select beginTime + 0 as mendDateTime, unix_timestamp(beginTime) as mendTime from eemcDbADCconf Where nodeID=16 AND flavor In('ofl') AND (deactive=0 OR deactive > =1068768000) AND unix_timestamp(entryTime) < =1068768000 AND beginTime > from_unixtime(1054276488) And elementID In(1) Order by beginTime limit 1

select unix_timestamp(beginTime) as bTime,eemcDbADCconf.* from eemcDbADCconf Where nodeID=16 AND flavor In('ofl') AND (deactive=0 OR deactive>=1068768000) AND unix_timestamp(entryTime) < =1068768000 AND beginTime < =from_unixtime(1054276488) AND elementID In(1) Order by beginTime desc limit 1

How-To: user section

Useful database tips and tricks, which could be useful for STAR activities, are stored in this section.

New database tables, complete guide

How-To: request new DB tables

-

First of all, you need to define all required variables you want to store in database, and their compositions. This means, you will have one or more C++ structures, stored as one or more tables in STAR Offline database. Typically, this is stored in either Calibrations or Geometry db domains. Please describe briefly the following: a) how those variables are supposed to be used in offline code (3-5 lines is enough), b) how many entries would be stored in database (e.g. once per run, every 4 hours, once per day * 100 days, etc);

-

Second, you need an IDL file, describing your C/C++ structure(s). This IDL file will be automatically compiled/converted into C++ header and Fortran structure descriptor – so STAR Framework will support your data at all stages automatically. NOTE: comment line length should be less than 80 characters due to STIC limitation.

EXAMPLE of IDL file:

fooGain.idl :

--------------------------------------------------------------------------------------------------------------

/* fooGain.idl*

* Table: fooGain

*

* description: // foo detector base gain

*

*/

struct fooGain {

octet fooDetectorId; /* DetectorId, 1-32 */

unsigned short fooChannel; /* Channel, 1-578*/

float fooGain; /* Gain GeV/channel */

};

--------------------------------------------------------------------------------------------------------------

Type comparison reference table :

-

IDL

C++

short, 16 bit signed integer

short

unsigned short, 16 bit unsigned integer

unsigned short

long, 32 bit signed integer

int

unsigned long, 32 bit unsigned integer

unsigned int

float, 32 bit IEEE float

float

double, 64 bit IEEE double

double

char, 8 bit ISO latin-1

char

octet, 8 bit byte (0x00 to 0xFF)

unsigned char

Generally, you should try to use types with smallest size, which fits your data. For example, if you expect your detector ids to go from 1 to 64, please use “octet” type, which is translated to “unsigned char” (0-255), not integer (0 - 65k).

-

There are two types of tables supported by STAR DB schema: non-indexed table and indexed table. If you are able to fit your data into one record, and you expect it to change completely (all members), than you need non-indexed table. If you know that you'll have, say, 16 rows corresponding to 16 subdetector ids, and only a few of those will change at a time, than you need indexed table. Please write down your index name, if needed (e.g. detectorID) and its range (e.g. 1-16) - if there's no index needed, just write "non-indexed".

-

Calculate average size of your structure, per record. You will need this to understand total disk space/memory requirements – for optimal performance on both database and C++ side, we want our tables to be as small as possible. Bloated tables are hard to retrieve from database, and might consume too much RAM on working node during BFC chain run.

EXAMPLE :In a step 1 we created fooGain.idl file. Now, we need to convert it into header file, so we can check structure size. Let's do that (at any rcas node) :

shell> mkdir TEST; cd TEST; # create temporary working directory

shell> mkdir -p StDb/idl; # create required directory structure

shell> cp fooGain.idl StDb/idl/; # copy .idl file to proper directoryshell> cons; # compile .idl file

After that, you should be able to include .sl44_gcc346/include/fooGain.h into your test program, so you can call sizeof(fooGain_st) to determine structure size, per entry. We need to write down this number too. Ultimately, you should multiply this size by expected number of entries from step 1 – generally, this should fit into 1 Gb limit (if not – it needs to be discussed in detail).

-

Decide on who is going to insert data into those tables, write down her/his rcf login (required to enable db “write” access permissions). Usually, subsystem coordinator is a best choice for this task.

-

Create Drupal page (could be your blog, or subsystem page) with the information from steps 1-5, and send a link to it to current database administrator or db-devel maillist. Please allow one day for table creation and propagation of committed .idl files to .dev version of STAR software. Done! If you need to know how to read or write your new tables, please read Frequently Asked Questions page, it has references to read/write db examples Frequently Asked Questions.

Time Stamps

| STAR Computing | Tutorials main page |

| STAR Databases: TIMESTAMP

|

|

| Offline computing tutorial | |

TIMESTAMPS

|

There are three timestamps used in STAR databases;

EntryTime and deactive are essential for 'reproducibility' and 'stability' in production. The beginTime is the STAR user timestamp. One manifistation of this, is the time recorded by daq at the beginning of a run. It is valid unti l the the beginning of the next run. So, the end of validity is the next beginTime. In this example it the time range will contain many eve nt times which are also defined by the daq system. The beginTime can also be use in calibration/geometry to define a range of valid values. EXAMPLE: (et = entryTime) The beginTime represents a 'running' timeline that marks changes in db records w/r to daq's event timestamp. In this example, say at some tim e, et1, I put in an initial record in the db with daqtime=bt1. This data will now be used for all daqTimes later than bt1. Now, I add a second record at et2 (time I write to the db) with beginTime=bt2 > bt1. At this point the 1st record is valid from bt1 to bt2 and the second is valid for bt2 to infinity. Now I add a 3rd record on et3 with bt3 < bt1 so that

Let's say that after we put in the 1st record but before we put in the second one, Lydia runs a tagged production that we'll want to 'use' fo rever. Later I want to reproduce some of this production (e.g. embedding...) but the database has changed (we've added 2nd and 3rd entries). I need to view the db as it existed prior to et2. To do this, whenever we run production, we defined a productionTimestamp at that production time, pt1 (which is in this example < et2). pt1 is passed to the StDbLib code and the code requests only data that was entered before pt1. This is how production in 'reproducible'. The mechanism also provides 'stability'. Suppose at time et2 the production was still running. Use of pt1 is a barrier to the production from 'seeing' the later db entries. Now let's assume that the 1st production is over, we have all 3 entries, and we want to run a new production. However, we decide that the 1st entry is no good and the 3rd entry should be used instead. We could delete the 1st entry so that 3rd entry is valid from bt3-to-bt2 but the n we could not reproduce the original production. So what we do is 'deactivate' the 1st entry with a timestamp, d1. And run the new production at pt2 > d1. The sql is written so that the 1st entry is ignored as long as pt2 > d1. But I can still run a production with pt1 < d1 which means the 1st entry was valid at time pt1, so it IS used. email your request to the database expert.

In essence the API will request data as following: 'entryTime <productionTime<deactive || entryTime< productionTime & deactive==0.' To put this to use with the BFC a user must use the dbv switch. For example, a chain that includes dbv20020802 will return values from the database as if today were August 2, 2002. In other words, the switch provides a user with a snapshot of the database from the requested time (which of coarse includes valid values older than that time). This ensures the reproducability of production.

Below is an example of the actual queries executed by the API:

select unix_timestamp(beginTime) as bTime,eemcDbADCconf.* from eemcDbADCconf Where nodeID=16 AND flavor In('ofl') AND (deactive=0 OR deactive>=1068768000) AND unix_timestamp(entryTime) < =1068768000 AND beginTime < =from_unixtime(1054276488) AND elementID In(1) Order by beginTime desc limit 1

For a description of format see ....

|

How-To: admin section

This section contains various pages explaining how to do typical STAR DB administrative tasks. Warning: this section and recipies are NOT for users, but for administrator - following this recipies may be harmful to STAR, if improperly used.

Basic Database Storage Structure

Introduction

To ease maintainence of databases, a common storage structure is used where applicable. Such a structure has been devised for such database types as Conditions, Configurations, Calibrations, and Geometry. Other database types (e.g. RunLog & Production Database) require additional low level structures specific to the access requirements for the data they contain. These shall be documented independently as they are developed. The base table structures are discussed according to their function. Within each sub-section, the real table names are written with purple leters.

- Schema Definition

- Named References

- Index

- Data Storage

- Configuration (ensemble links) *

- Catalog of Configurations *

Schema DefinitionThe data objects stored in DB are pre-defined by c-structs in header files (located in $STAR/StDb/include directories). The database is fed the information about these c-structs which it stores in a pair of tables used to re-construct the c-struct definitions used for storing the data. This information can also be used to provide the memory allocation for the tables returned in the C++ API, but this can also be over-ridden to request any c-struct type allowed by the request. That is, as long as the table requested contained (at some time) items in the user-input c-struct description, the data will be return in the format requested. To perform such operations, the two DB tables used are,

|

|

| The schema table holds the names of all elements associated with a c-struct, thier relative order in the c-struct, and in which schema-versions each element exists. The table contains the fields, |

|

| *** Will replace "position" field and add information for total binary store of structure but requires that the schema-evolution model be modified. This will be for next interation of database storage structure |

Named ReferencesThere are c-structs which are used for more than one purpose. For example, Daq's detector structure is common to all types of detectors but referenced by different names when a detector specific set of data is requested or stored. Thus a request for a structure is actually a request for a named reference which in many cases is simply the name of the structure but can be different. This information is kept in the namedRef table which contains; |

|

IndexData is accessed from the database via reference contained in an index table called dataIndex. The purpose of this table is to provide access to specific rows of specific storage tables per the request information of name, timestamp, and version . Additional requests for specific element identifiers are also allowed (e.g. Sector01 of the tpc)via this index. The table contains the following fields; |

|

Data StorageThere are 2 general type of storage tables used in the mysql database. The first type of storage makes use of MySQL basic storage types to store the data as specified by the schema of the c-struct. That is, each element of the c-struct is given a column in which values are recorded and can subsequently be queried against. The name of the table is the same as the name of the structure obtained from the structure table and referenced directly from the namedRef table. Within this structure, large arrays can be handled using binary storage (within mysql) by defining the storage type as being binary. Each row of each storage table contains a unique row id that is used by the dataIndex table. example: The tpcElectronics table contains the following fields; |

|

| An additional storage table is used for those table which are more easily stored in purely binary form. This is different then the note above where some "columns" of a c-struct are large arrays and can be independently (column-specific) stored in binary form. What is meant by purely binary form is that the entire c-struct (&/or many rows) is stored as a single binary blob of data. This is (to be) handled via the bytes table. The rows in the bytes table are still pointed to via the dataIndex table.

The content of the stored data is extracted using the information about how it was stored from the namedRef, schema, & structure tables. The bytes table contains the following fields; |

|

ConfigurationsThe term Configurations within the Star Databases refers to ensembles of data that are linked together in a common "named" structure. The extent of a Configuration may span many Star Databases (i.e. a super-configuration) to encompass a entire production configuration but is also complete at each database. For example, there is a data ensemble for the Calibrations_tpc database that can be requested explicity but that can also be linked together with a Calibrations_svt, Calibrations_emc, ... configurations to form a Calibrations configuration. The data ensembles are organized by a pair of tables found in each database. These tables are

The Nodes table contains the following fields; |

|

| The NodeRelation table contains the following fields; |

|

CatalogThe Catalog structure in the database is used to organized different configurations into understandable groupings. This is much like a folder or directory system of configurations. Though very similar to the configuration database structure it is different in use. That is, the Nodes and NodeRelation tables de-reference configurations as a unique data ensemble per selection. The Catalog is used to de-references many similar configurations into groupings associated with tasks. The Catalog table structure has only seen limited use in Online databases and is still under development. Currently there 2 tables associated with the catalog,

The Nodes table contains the following fields; |

|

| The NodeRelation table contains the following fields; |

|

commentThe Offline production chain does not use the Configurations & Catalog structures provided by the database. Rather an independent configuration is stored and retrieved via an offline table, tables_hierarchy, that is the kept within the St_db_Maker. This table contains the fields; |

|

This table is currently stored as a binary blob in the

params

database.

Creating Offline Tables

HOW TO ADD NEW TABLE TO DATABASE:

create a working directory.

$> cvs co StDb/idl (overkill - but so what) $> cp StDb/idl/svtHybridDriftVelocity.idl . $> mkdir include && mkdir xml $> cp svtHybridDriftVelocity.idl include/ $> mv include/svtHybridDriftVelocity.idl include/svtHybridDriftVelocity.h $> cp -r ~deph/updates/pmd/070702/scripts/ . # this directory is in cvs $> vim include/svtHybridDriftVelocity.h

### MAKE SURE your .idl file comments are less than 80 characters due to STIC limitation. "/* " and " */" also counted, so real comment line should be less than 74 chars.

### Check and change datatypes - octets become unsigned char and long becomes int, see table for mapping details:

| IDL | C++ | MySQL |

| short, 16 bit signed integer | short, 2 bytes | SMALLINT (-32768...32768) |

| unsigned short, 16 bit unsigned integer | unsigned short, 2bytes | SMALLINT UNSIGNED (0...65535) |

| long, 32 bit signed integer | int, 4 bytes | INT (-2147483648...2147483647) |

| unsigned long, 32 bit unsigned integer | unsigned int, 4 bytes | INT UNSIGNED (0...4294967295) |

| float, 32 bit IEEE float | float, 4 bytes | FLOAT |

| double, 64 bit IEEE double | double, 8 bytes | DOUBLE |

| char, 8 bit ISO latin-1 | char, 1 byte | CHAR |

| octet, 8 bit byte (0x00 to 0xFF) | unsigned char, 1 byte | TINYINT UNSIGNED (0...255) |

NOW execute a bunch of scripts (italics are output) ( all scripts provide HELP when nothing is passed) .....

1) ./scripts/dbTableXml.pl -f include/svtHybridDriftVelocity.h -d Calibrations_svt

inputfile= include/svtHybridDriftVelocity.h

input database =Calibrations_svt

******************************

*

* Running dbTableXML.pl

*

outputfile = xml/svtHybridDriftVelocity.xml

******************************

2) ./scripts/dbDefTable.pl -f xml/svtHybridDriftVelocity.xml -s robinson.star.bnl.gov -c

#####output will end with create statement####

3) ./scripts/dbGetNode.pl -s robinson.star.bnl.gov -d Calibrations_svt

###retuns a file called svtNodes.xml

4) vim svtNodes.xml

###add this line <dbNode> svtHybridDriftVelocity <StDbTable> svtHybridDriftVelocity </StDbTable> </dbNode>

### this defines the node in the Nodes table

5) ./scripts/dbDefNode.pl -s robinson.star.bnl.gov -f svtNodes.xml

###garbage output here....

###now do the same for NodeRelations table

6) ./scripts/dbGetConfig.pl -s robinson.star.bnl.gov -d Calibrations_svt

###might retrun a couple of different configurations (svt definatetely does)

###we're interested in

7) vim Calibrations_svt_reconV0_Config.xml

###add this line <dbNode> svtHybridDriftVelocity <StDbTable> svtHybridDriftVelocity </StDbTable> </dbNode>

8) /scripts/dbDefConfig.pl -s robinson.star.bnl.gov -f Calibrations_svt_reconV0_Config.xml

IF YOU NEED TO UPDATE TABLE, WHICH NAME IS DIFFERENT FROM C++ STRUCTURE NAME:

$> ./scripts/dbDefTable.pl -f xml/

<table_xml>

.xml -s <dbserver>.star.bnl.gov -n

<table_name>

;

</table_name>

</dbserver>

</table_xml>How to check if table name does not equal to c++ struct name :

mysql prompt> select * from Nodes where structName = 'c++ struct name'

This will output the "name", which is real db table name, and "structName", which is the c++ struct name.

IF YOU NEED TO CREATE A NEW PROTO-STRUCTURE (no storage):

./scripts/dbDefTable.pl -f xml/<structure>.xml -s robinson.star.bnl.gov -e

IF YOU NEED TO CREATE A NEW TABLE BASED ON THE EXISTING STRUCTURE:

checkout proto-structure IDL file, convert to .h, then to .xml, then do:

$> ./scripts/dbDefTable.pl -f xml/<structure>.xml -s .star.bnl.gov -s <table-name><br />

then add mapping entry to the tableCatalog (manually)IMPORTANT: WHEN NEW TPC TABLE IS CREATED, THERE SHOULD BE A NEW LOG TRIGGER DEFINED!

please check tmp.dmitry/triggers directory at robinson for details..

-Dmitry

Deactivating records

A full description of the deactivation feild can be found here

http://drupal.star.bnl.gov/STAR/comp/db/time-stamps

Briefly, it is the field that removes a record from a particular query based on time. This allows for records that are found to be erroneous, to remain available to older libraries that may have used the values.

The deactive field in the database is an integer, that contains a UNIX Time Stamp.

IMPORTANT - entryTime is a timestamp which will get changed with an update - this needs to remain the same...so set entryTime = entryTime.

To deactivate a record:

1) You must have write privileges on robinson

2) There is no STAR GUI or C++ interface for this operation so it must be done from either the MySQL command line or one of the many MySQL GUIs e.g., PHPAdmin, etc.

3) update <<TABLE>> set entryTime = entryTime, deactive = UNIX_TIMESTAMP(now()) where <<condition e.g., beginTIme between "nnnn" and "nnnn">>

4) do a SELECT to check if OK, that's it!

Element IDs

PHILOSOPHY

Element IDs are part of the OFFLINE Calibrations data primary key.

This is the mechanism that allows more the one row of data returned per time stamp (beginTime).

If the elementID is not set to 0 and is set to n, the API will return between 1 and n rows depending on how many rows have be inserted into the database.

A change INCREASING the elementID is backward compatable with a table that had element ID originally set to 0. The only thing to watch out for is a message that will read something like n rows looked for 1 return.

IMPLIMENTATION

In order for this to work three actions need to be taken:

1) Insert the rows with the element ID incrementing 1,2,3...

2) an new table called blahIDs needs to be created - the syntax is important and blah should be replaced with something descriptive.

3) in the nodes table identify the index by update in relevant node (table) and update the index feild with blah

For example the pmd SMCalib table wants to return 24 rows:

A table is created and filled (onlthing different will be the table name and the third "descriptive column" and the number of rows:

mysql> select * from pmdSmIDs;

+----+-----------+----+

| ID | elementID | SM |

+----+-----------+----+

| 1 | 1 | 1 |

| 2 | 2 | 2 |

| 3 | 3 | 3 |

| 4 | 4 | 4 |

| 5 | 5 | 5 |

| 6 | 6 | 6 |

| 7 | 7 | 7 |

| 8 | 8 | 8 |

| 9 | 9 | 9 |

| 10 | 10 | 10 |

| 11 | 11 | 11 |

| 12 | 12 | 12 |

| 13 | 13 | 13 |

| 14 | 14 | 14 |

| 15 | 15 | 15 |

| 16 | 16 | 16 |

| 17 | 17 | 17 |

| 18 | 18 | 18 |

| 19 | 19 | 19 |

| 20 | 20 | 20 |

| 21 | 21 | 21 |

| 22 | 22 | 22 |

| 23 | 23 | 23 |

| 24 | 24 | 24 |

+----+-----------+----+

24 rows in set (0.01 sec)

The the Nodes table is update to read as follows:

mysql> select * from Nodes where name = 'pmdSMCalib' \G

*************************** 1. row ***************************

name: pmdSMCalib

versionKey: default

nodeType: table

structName: pmdSMCalib

elementID: None

indexName: pmdSm

indexVal: 0

baseLine: N

isBinary: N

isIndexed: Y

ID: 6

entryTime: 2005-11-10 21:06:11

Comment:

1 row in set (0.00 sec)

+++++++++++++++++++++++++++++

note the index field reads pmdSm not the default none.

HOW-TO: elementID support

HOW-TO enable 'elementID' support for a given offline table

Let's see how elementID support could be added on example of "[bemc|bprs|bsmde|bsmdp]Map" tables.

1. Lets' try [bemc|bprs]Map table first:

1.1 Create table 'TowerIDs' (4800 channels)

CREATE TABLE TowerIDs ( ID smallint(6) NOT NULL auto_increment, elementID int(11) NOT NULL default '0', Tower int(11) NOT NULL default '0', KEY ID (ID), KEY Tower (Tower) ) ENGINE=MyISAM DEFAULT CHARSET=latin1;

1.2 Fill this table with values (spanning from 1 to [maximum number of rows]):

INSERT INTO TowerIDs VALUES (1,1,1); INSERT INTO TowerIDs VALUES (2,2,2); INSERT INTO TowerIDs VALUES (3,3,3); ... INSERT INTO TowerIDs VALUES (4800,4800,4800);

Sample bash script to generate ids

#!/bin/sh echo "USE Calibrations_emc;" for ((i=1;i<=4800;i+=1)); do echo "INSERT INTO TowerIDs VALUES ($i,$i,$i);" done for ((i=1;i<=18000;i+=1)); do echo "INSERT INTO SmdIDs VALUES ($i,$i,$i);" done

1.3 Update 'Nodes' table to make it aware of new index :

UPDATE Nodes SET Nodes.indexName = 'Tower' WHERE Nodes.structName = 'bemcMap'; UPDATE Nodes SET Nodes.indexName = 'Tower' WHERE Nodes.structName = 'bprsMap';

2. Now, smdChannelIDs (18000 channels)

1.1 Create db index table:

CREATE TABLE SmdIDs ( ID smallint(6) NOT NULL auto_increment, elementID int(11) NOT NULL default '0', Smd int(11) NOT NULL default '0', KEY ID (ID), KEY Smd (Smd)

) ENGINE=MyISAM DEFAULT CHARSET=latin1;

1.2 Fill this table with values:

INSERT INTO SmdIDs VALUES (1,1,1); INSERT INTO SmdIDs VALUES (2,2,2); INSERT INTO SmdIDs VALUES (3,3,3); ... INSERT INTO SmdIDs VALUES (18000,18000,18000);

(see helper bash script above)

1.3 Update 'Nodes' to make it aware of additional index :

UPDATE Nodes SET Nodes.indexName = 'Smd' WHERE Nodes.structName = 'bsmdeMap'; UPDATE Nodes SET Nodes.indexName = 'Smd' WHERE Nodes.structName = 'bsmdpMap';

LAST STEP: Simple root4star macro to check that everything is working fine ( courtesy of Adam Kocoloski ):

{ gROOT->Macro("LoadLogger.C");

gROOT->Macro("loadMuDst.C");

gSystem->Load("StDbBroker");

gSystem->Load("St_db_Maker");

St_db_Maker dbMk("StarDb", "MySQL:StarDb");

// dbMk.SetDebug(10); // Could be enabled to see full debug output

dbMk.SetDateTime(20300101, 0);

dbMk.Init();

dbMk.GetInputDB("Calibrations/emc/y3bemc");

dbMk.GetInputDB("Calibrations/emc/map");

}

Process for Defining New or Evolved DB Tables

Introduction

The process for defining new database tables has several steps, many are predicated on making the data available to the offline infrastructure. The steps are listed here and detailed further down. Currently, the limitations on people performing these steps are those of access protection either in writing to the database or updating the cvs repository. Such permissions are given to specific individuals responsible per domain level (e.g. Tpc, Svt,...) .

- Schema Definition in Database

- Data loading into database

- Idl definition in Offline repository

- Table (Query) list for St_db_Maker (now obsolete)

- Table (Query) support for StDbLib

Several of the steps involve running perl scripts. Each of these scripts require arguments. Supplying no arguments will generate a description of what the script will do and what arguments are needed/optional.

Schema Definition in DatabaseThe data objects stored in DB are pre-defined by c-structs in header files (located in $STAR/StDb/include directories). The database is fed the information about these c-structs which it stores for use during later read and write operations. The process is performed by a set of perl scripts which do the following; |

|

| These steps are done pointing to a specific database (and a specific MySQL server). That is, the schema used to store data in a database is kept within that database so there is no overlap between, say, a struct defined for the tpc Calibrations and that defined for the emc Calibrations.

Example of taking a new c-struct through to making it available in a Configuration (such as used in Offline).

|

Data Loading into DataBaseThe data loading into the database can take many forms. The most typical is via the C++ API and can be done via a ROOT-CINT macro once the c-struct has been compiled and exists in a shared library.

|

Idl definitions in the Offline RepositoryFor data to be accessed in the offline chain, a TTable class for the database struct must exist. The current pre-requesite for this to occur is the existance of an idl file for the structure. This is the reason that the dbMakeFiles.pl was written. Once the dbMakeFiles.pl script is run for a specific structure to produce the idl file from the database record of the schema, this file must be placed in a repository which the Offline build scripts know about in order for the appropriate TTable to be produced. This repository is currently;

For testing new tables in the database with the TTable class;

|

Table (Query) List for St_db_Maker

(Now Obsolete)To access a set of data in the offline chain, The St_db_Maker needs to know what data to request from the database. This is predetermined as the St_db_Maker's construction and Init() phases. Specifically, the St_db_Maker is constructed with "const char* maindir" argument of the form,

The structure of this list is kept only inside the St_db_Maker and stored within a binary blob inside the params database. Updates must be done only on "www.star.bnl.gov".

|

Table (Query) List from StDbLib

The C++ API was written to understand the Configuration tables structure and to provide access to an ensemble of data with a single query. For example, the configuration list is requested by version name and provides "versioning" at the individual table level. It also prepares the list with individual element identifiers so that different rows (e.g. tpc-sectors, emc-modules, ...) can be accessed independently as well as whether the table is carries a "baseLine" or "time-Indexed" attribute for which provide information about how one should reference the data instances.

Storage of a Configuration can be done via the C++ API (see StDbManager::storeConfig()) but is generally done via the perl script dbDefConfig.pl. The idea behind the configuration is to allow sets of structures to be linked together in an ensemble. Many such ensembles could be formed and identified uniquely by name. However, we currently do not use this flexibility in the Offline access model hence only 1 named ensemble exists per database. In the current implementation of the Offline access model, the St_db_Maker requests this 1 configuration from the StDbBroker which passes the request to the C++ API. This sole configurations is returned to the St_db_Maker as a array of c-structs identifying each table by name, ID, and parent association.

Set up replication slave for Offline and FC databases

Currently, STAR is able to provide nightly Offline and FC snapshots to allow easy external db mirror setup.

Generally, one will need three things :

- MySQL 5.1.73 in either binary.tgz form or rpm;

- Fresh snapshot of the desired database - Offline or FileCatalog;

- Personal UID for every external mirror/slave server;

Current STAR policy is that every institution must have a local maintainer with full access to db settings.

Here are a few steps to get external mirror working :

- Request "dbuser" account at "fc3.star.bnl.gov", via SKM ( https://www.star.bnl.gov/starkeyw/ );

- Log in to dbuser@fc3.star.bnl.gov;

- Cd to /db01/mysql-zrm/offline-daily-raw/ or /db01/mysql-zrm/filecatalog-daily-raw to get "raw" mysql snapshot version;

- Copy backup-data file to your home location as offline-snapshot.tgz and extract it to your db data directory (we use /db01/offline/data at BNL);

- Make sure your configuration file (/etc/my.cnf) is up to date, all directories point to your mysql data directory, and you have valid server UID (see example config at the bottom of page). If you are setting up new mirror - please request personal UID for your new server from STAR DB administrator, otherwise please reuse existing UID; Note: you cannot use one UID for many servers, even if you have many local mirrors - you MUST have personal UID for each server.

- If you are running Scientific Linux 6, make sure you have either /etc/init.d/mysqld or /etc/init.d/mysqld_multi scripts, and "mysqld" or "mysqld_multi" is registered as service with chkconfig.

- Start MySQL with "service mysqld start" or "service mysqld_multi start 3316". Replication should be OFF at this step (skip-slave-start option in my.cnf), because we need to update master information first.

- Log in to mysql server and run 'CHANGE MASTER TO ...' followed by 'SLAVE START' to initalize replication process.

Steps 1-8 should complete replication setup. Following checks are highly recommended :

- Make sure your mysql config has "report-host" and "report-port" directives set to your db host name and port number. This is requred for indirect monitoring.

- Make sure your server has an ability to restart in case of reboot/crash. For RPM MySQL version, there is a "mysqld" or "mysqld_multi" script (see attached files as examples), which you should register with chkconfig to enable automatic restart.

- Make sure you have local db monitoring enabled. It could be one of the following : Monit, Nagios, Mon (STAR), or some custom script that does check BOTH server availability/accessibility and IO+SQL replication threads status.

If you require direct assistance with MySQL mirror setup from STAR DB Administrator, you should allow DB admin's ssh key to be installed to root@your-db-mirror.edu.

At this moment, STAR DB Administrator is Dmitry Arkhipkin (arkhipkin@bnl.gov) - feel free to contact me about ANY problems with STAR or STAR external mirror databases, please.

Typical MySQL config for STAR OFFLINE mirror (/etc/my.cnf) :

[mysqld]<br />

user = mysql<br />

basedir = /db01/offline/mysql<br />

datadir = /db01/offline/data<br />

port = 3316<br />

socket = /tmp/mysql.3316.sock<br />

pid-file = /var/run/mysqld/mysqld.pid<br />

skip-locking<br />

log-error = /db01/offline/data/offline-slave.log.err<br />

max_allowed_packet = 16M<br />

max_connections = 4096<br />

table_cache=1024<br />

query-cache-limit = 1M<br />

query-cache-type = 1<br />

query-cache-size = 256M<br />

sort_buffer = 1M<br />

myisam_sort_buffer_size = 64M<br />

key_buffer_size = 256M<br />

thread_cache = 8<br />

thread_concurrency = 4<br />

relay-log=/db01/offline/data/offline-slave-relay-bin<br />

relay-log-index=/db01/offline/data/offline-slave-relay-bin.index<br />

relay-log-info-file=/db01/offline/data/offline-slave-relay-log.info<br />

master-info-file=/db01/offline/data/offline-slave-master.info<br />

replicate-ignore-db = mysql<br />

replicate-ignore-db = test<br />

replicate-wild-ignore-table = mysql.%<br />

read-only<br />

report-host = [your-host-name]<br />

report-port = 3316<br />

server-id = [server PID] <br />

# line below should be commented out after 'CHANGE MASTER TO ...' statement applied.<br />

skip-slave-start [mysqld_safe]<br />

log=/var/log/mysqld.log<br />

pid-file=/var/run/mysqld/mysqld.pid<br />

timezone=GMTTypical 'CHANGE MASTER TO ...' statement for OFFLINE db mirror :

CHANGE MASTER TO MASTER_HOST='robinson.star.bnl.gov', MASTER_USER='starmirror', MASTER_PASSWORD='[PASS]', MASTER_PORT=3306, MASTER_LOG_FILE='robinson-bin.00000[ZYX]', MASTER_LOG_POS=[XYZ], MASTER_CONNECT_RETRY=60; START SLAVE; SHOW SLAVE STATUS \G

You can find [PASS] in master.info, robinson-bin.00000[ZYX] and [XYZ] in relay-log.info files in offline-snapshot.tgz archive

database query for event timestamp difference calculation

Database query, used by Gene to calculate [run start - first event] time differences...

select ds.runNumber,ds.firstEventTime, (ds.firstEventTime-rd.startRunTime),(rd.endRunTime-ds.lastEventTime) from runDescriptor as rd left join daqSummary as ds on ds.runNumber=rd.runNumber where rd.endRunTime>1e9 and ds.lastEventTime>1e9 and ds.firstEventTime>1e9;

Moved to protected page, because it is not recommended to use direct online database queries for anybody but online experts.

How-To: subsystem coordinator

Recommended solutions for common subsystem coordinator's tasks reside here.

Please check pages below.

Database re-initialization

It is required to

a) initialize Offline database for each subsystem just before new Run,

..and..

b) put "closing" entry at the end of the "current" Run;

To make life easier, there is a script which takes database entry from specified time, and re-inserts it at desired time. Here it is:

void table_reupload(const char* fDbName = 0, const char* fTableName = 0, const char* fFlavorName = "ofl",

const char* fRequestTimestamp = "2011-01-01 00:00:00",

const char* fStoreTimestamp = "2012-01-01 00:00:00" ) {

// real-life example :

// fDbName = "Calibrations_tpc";

// fTableName = "tpcGas";

// fFlavorName = "ofl"; // "ofl", "simu", other..

// fRequestTimestamp = "2010-05-05 00:00:00";

// fStoreTimestamp = "2011-05-05 00:00:00";

if (!fDbName || !fTableName || !fFlavorName || !fRequestTimestamp || ! fStoreTimestamp) {

std::cerr << "ERROR: Missing initialization data, please check input parameters!\n";

return;

}

gSystem->Setenv("DB_ACCESS_MODE", "write");

// Load all required libraries

gROOT->Macro("LoadLogger.C");

gSystem->Load("St_base.so");

gSystem->Load("libStDb_Tables.so");

gSystem->Load("StDbLib.so");

// Initialize db manager

StDbManager* mgr = StDbManager::Instance();

mgr->setVerbose(true);

StDbConfigNode* node = mgr->initConfig(fDbName);

StDbTable* dbtable = node->addDbTable(fTableName);

//dbtable->setFlavor(fFlavorName);

// read data for specific timestamp

mgr->setRequestTime(fRequestTimestamp);

std::cout << "Data will be fetched as of [ " << mgr->getDateRequestTime() << " ] / "<< mgr->getUnixRequestTime() <<" \n";

mgr->fetchDbTable(dbtable);

// output results

std::cout << "READ CHECK: " << dbtable->printCstructName() << " has data: " << (dbtable->hasData() ? "yes" : "no") << " (" << dbtable->GetNRows() << " rows)" << std::endl;

if (!dbtable->hasData()) {

std::cout << "ERROR: This table has no data to reupload. Please try some other timestamp!";

return;

} else {

std::cout << "Data validity range, from [ " << dbtable->getBeginDateTime() << " - " << dbtable->getEndDateTime() << "] \n";

}

char confirm[255];

std::string test_cnf;

std::cout << "ATTENTION: please confirm that you want to reupload " << fDbName << " / " << fTableName << ", " << fRequestTimestamp << " data with " << fStoreTimestamp << " timestamp.\n Type YES to proceed: ";

std::cin.getline(confirm,256);

test_cnf = confirm;

if (test_cnf != "YES") {

std::cout << "since you've typed \"" << test_cnf << "\" and not \"YES\", data won't be reuploaded." << std::endl;

return;

}

// store data back with new timestamp

if (dbtable->hasData()) {

mgr->setStoreTime(fStoreTimestamp);

if (mgr->storeDbTable(dbtable)) {

std::cout << "SUCCESS: Data reupload complete for " << fDbName << " / " << fTableName << " [ flavor : " << fFlavorName << " ]"

<< "\n" << "Data copied FROM " << fRequestTimestamp << " TO " << fStoreTimestamp << std::endl << std::endl;

} else {

std::cerr << "ERROR: Something went wrong. Please send error message text to DB Admin!" << std::endl;

}

}

}New subsystem checklist

DB: New STAR Subsystem Checklist

1. Introduction

There are three primary database types in STAR: Online databases, Offline databases and FileCatalog. FileCatalog database is solely managed by STAR S&C, so subsystem coordinators should focus on Online and Offline databases only.

Online database is dedicated to subsystem detector metadata, collected during the Run. For example, it may contain voltages, currents, error codes, gas meter readings or something like this, recorded every 1-5 minutes during the Run. Or, it may serve as a container for derived data, like online calibration results.

Online db metadata are migrated to Offline db for further usage in STAR data production. Major difference between Online and Offline is the fact that Online db is optimized for fast writes, and Offline is optimized for fast reads to achieve reasonable production times. Important: each db type has its own format (Offline is much more constrained).

Offline database contains extracts from Online database, used strictly as accompanying metadata for STAR data production purposes. This metadata should be preprocessed for easy consumption by production Makers, thus it may not contain anything involving complex operations on every data access.

2. Online database key moments

o) Please don't plan your own database internal schema. In a long run, it won't be easy to maintain custom setup, therefore, please check possible options with STAR db administrator ASAP.

o) Please decide on what exactly you want to keep track of in Online domain. STAR DB administrator will help you with data formats and optimal ways to store and access that data, but you need to define a set of things to be stored in database. For example, if your subsystem is connected to Slow Controls system (EPICS), all you need is to pass desired channel names to db admistrator to get access to this data via various www access methods (dbPlots, RunLog etc..).

3. Offline database key moments

o) ...

o) ...

Online Databases

Online Databases

Online databases are :

onldb.starp.bnl.gov:3501 - contains 'RunLog', 'Shift Sign-up' and 'Online' databases

onldb.starp.bnl.gov:3502 - contains 'Conditions_<subsysname>' databases (online daemons)

onldb.starp.bnl.gov:3503 - contains 'RunLog_daq' database (RTS system)

db01.star.bnl.gov:3316/trigger is a special buffer db for FileCatalog migration database

Replication slaves :

onldb2.starp.bnl.gov:3501 (slave of onldb.starp.bnl.gov:3501)

onldb2.starp.bnl.gov:3502 (slave of onldb.starp.bnl.gov:3502)

onldb2.starp.bnl.gov:3503 (slave of onldb.starp.bnl.gov:3503)

.gif)

How-To Online DB Run Preparation

This page contains basic steps only, please see subpages for details on Run preparations!

I. The following paragraphs should be examined *before* the data taking starts:

--- onldb.starp.bnl.gov ---

1. DB: make sure that databases at ports 3501, 3502 and 3503 are running happily. It is useful to check that onldb2.starp, onl10.starp and onl11.starp have replication on and running.

2. COLLECTORS AND RUNLOG DAEMON: onldb.starp contains "old" versions of metadata collectors and RunLogDB daemon. Collector daemons need to be recompiled and started before the "migration" step. One should verify with "caGet <subsystem>.list" command that all EPICS variables are being transmitted and received without problems. Make sure no channels produce "cannot be connected" or "timeout" or "cannot contact IOC" warnings. If they do, please contact Slow Controls expert *before* enabling such service. Also, please keep in mind that RunLogDB daemon will process runs only if all collectors are started and collect meaningful data.

3. FASTOFFLINE: To allow FastOffline processing, please enable cron record which runs migrateDaqFileTags.new.pl script. Inspect that script and make sure that $minRun variable is pointing to some recently taken run or this script will consume extra resource from online db.

4. MONITORING: As soon as collector daemons are started, database monitoring scripts should be enabled. Please see crontabs under 'stardb' and 'staronl' accounts for details. It is recommended to verify that nfs-exported directory on dean is write-accessible.

Typical crontab for 'stardb' account would be like:

*/3 * * * * /home/stardb/check_senders.sh > /dev/null

*/3 * * * * /home/stardb/check_cdev_beam.sh > /dev/null

*/5 * * * * /home/stardb/check_rich_scaler_log.sh > /dev/null

*/5 * * * * /home/stardb/check_daemon_logs.sh > /dev/null

*/15 * * * * /home/stardb/check_missing_sc_data.sh > /dev/null

*/2 * * * * /home/stardb/check_stale_caget.sh > /dev/null

(don't forget to set email address to your own!)

Typical crontab for 'staronl' account would look like:

*/10 * * * * /home/staronl/check_update_db.sh > /dev/null

*/10 * * * * /home/staronl/check_qa_migration.sh > /dev/null

--- onl11.starp.bnl.gov ---

1. MQ: make sure that qpid service is running. This service processes MQ requests for "new" collectors and various signals (like "physics on").

2. DB: make sure that mysql database server at port 3606 is running. This database stores data for mq-based collectors ("new").

3. SERVICE DAEMONS: make sure that mq2memcached (generic service), mq2memcached-rt (signals processing) and mq2db (storage) services are running.

4. COLLECTORS: grab configuration files from cvs, and start cdev2mq and ds2mq collectors. Same common sense rule applies: please check that CDEV and EPICS do serve data on those channels first. Also, collectors may be started at onl10.starp.bnl.gov if onl11.starp is busy with something (unexpected IO stress tests, user analysis jobs, L0 monitoring scripts, etc).

--- onl13.starp.bnl.gov ---

1. MIGRATION: check crontab for 'stardb' user. Mare sure that "old" and "new" collector daemons are really running, before moving further. Verify that migration macros experience no problems by trying some simple migration script. If it breaks saying that library is not found or something - find latest stable (old) version of STAR lib and set it to .cshrc config file. If tests succeed, enable cron jobs for all macros, and verify that logs contain meaningful output (no errors, warnings etc).

--- dean.star.bnl.gov ---

1. PLOTS: Check dbPlots configuration, re-create it as a copy with incremented Run number if neccesary. Subsystem experts tend to check those plots often, so it is better to have dbPlots and mq collectors up and running a little earlier than the rest of services.

2. MONITORING:

- Replication monitor aka Mon (replication should be on for all online servers);

- "old" collection daemon monitor (should be all green, some yellow possible);

- mq-based collectors, by checking "MQ Collectors" tab at Online Control Center (should be all green);

- check IOC monitor, to make sure that no EPICS channels are stuck.

- check physics on/off monitor after the fill, to make sure that CDEV transmits data correctly. If there is no data, then cdev2mq-rt service is not running. If data does not look realistic (shifted/offset timestamps) - please contact CAD, or at least let Jamie Dunlop know about it.

- check dbPlots to see that all collectors are really serving data and there are no delays.

3. RUNLOG - now RunLog browser should display recent runs.

--- db03.star.bnl.gov ---

1. TRIGGER COUNTS check cront tab for root, it should have the following records:

40 5 * * * /root/online_db/cron/fillDaqFileTag.sh

0,10,15,20,25,30,35,40,45,50,55 * * * * /root/online_db/sum_insTrgCnt >> /root/online_db/trgCnt.log

First script copies daqFileTag table from online db to local 'trigger' database. Second script calculates trigger counts for FileCatalog (Lidia). Please make sure that both migration and trigger counting work before you enable it in the crontab. There is no monitoring to enable for this service.

--- dbbak.starp.bnl.gov ---

1. ONLINE BACKUPS: make sure that mysql-zrm is taking backups from onl10.starp.bnl.gov for all three ports. It should take raw backups daily and weekly, and logical backups once per month or so. It is generally recommended to periodically store weekly / monthly backups to HPSS, for long-term archival using /star/data07/dbbackup directory as temporary buffer space.

II. The following paragraphs should be examined *after* the data taking stops:

1. DB MERGE: Online databases from onldb.starp (all three ports) and onl11.starp (port 3606) should be merged into one. Make sure you keep mysql privilege tables from onldb.starp:3501. Do not overwrite it with 3502 or 3503 data. Add privileges allowing read-only access to mq_collector_<bla> tables from onl11.starp:3606 db.

2. DB ARCHIVE PART ONE: copy merged database to dbbak.starp.bnl.gov, and start it with incremented port number. Compress it with mysqlpack, if needed. Don't forget to add 'read-only' option to mysql config. It is generally recommended to put an extra copy to NAS archive, for fast restore if primary drive crashes.

3. DB ARCHIVE PART TWO: archive merged database, and split resulting .tgz file into chunks of ~4-5 GB each. Ship those chunks to HPSS for long-term archival using /star/data07/dbbackup as temporary(!) buffer storage space.

4. STOP MIGRATION macros at onl13.starp.bnl.gov - there is no need to run that during summer shutdown period.

5. STOP trigger count calculations at db03.star.bnl.gov for the reason above.

HOW-TO: access CDEV data (copy of CAD docs)

As of Feb 18th 2011, previously existing content of this page is removed.

If you need to know how to access RHIC or STAR data available through CDEV interface, please read official CDEV documentation here : http://www.cadops.bnl.gov/Controls/doc/usingCdev/remoteAccessCdevData.html

Documentation for CDEV access codes used in Online Data Collector system will be available soon in appropriate section of STAR database documentation.

-D.A.

HOW-TO: compile RunLogDb daemon

- Login to root@onldb.starp.bnl.gov

- Copy latest "/online/production/database/Run_[N]/" directory contents to "/online/production/database/Run_[N+1]/"

- Grep every file and replace "Run_[N]" entrances with "Run_[N+1]" to make sure daemons pick up correct log directories

- $> cd /online/production/database/Run_9/dbSenders/online/Conditions/run

- $> export DAEMON=RunLogDb

- $> make

- /online/production/database/Run_9/dbSenders/bin/RunLogDbDaemon binary should be compiled at this stage

TBC

HOW-TO: create a new Run RunLog browser instance and retire previous RunLog

1. New RunLog browser:

- Copy the contents of the "/var/www/html/RunLogRunX" to "/var/www/html/RunLogRunY", where X is the previous Run ID, and Y is the current Run ID

- Change symlink "/var/www/html/RunLog" pointing to "/var/www/html/RunLogX" to "/var/www/html/RunLogY"

- TBC

2. Retire Previous RunLog browser:

- Grep source files and replace all "onldb.starp : 3501/3503" with "dbbak.starp:340X", where X is the id of the backed online database.

- TrgFiles.php and ScaFiles.php contain reference "/RunLog/", which should be changed to "/RunLogRunX/", where X is the run ID

- TBC

3. Update /admin/navigator.php immediately after /RunLog/ rotation! New run range is required.

HOW-TO: enable Online to Offline migration

Migration macros reside on stardb@onllinux6.starp.bnl.gov .

$> cd dbcron/macros-new/

(you should see no StRoot/StDbLib here, please don't check it out from CVS either - we will use precompiled libs)

First, one should check that Load Balancer config env. variable is NOT set :

$> printenv|grep DB

DB_SERVER_LOCAL_CONFIG=

(if it says =/afs/... .xml, then it should be reset to "" in .cshrc and .login scripts)

Second, let's check that we use stable libraries (newest) :

$> printenv | grep STAR

...

STAR_LEVEL=SL08e

STAR_VERSION=SL08e

...

(SL08e is valid for 2009, NOTE: no DEV here, we don't want to be affected by changed or broken DEV libraries)

OK, initial settings look good, let's try to load Fill_Magnet.C macro (easiest to see if its working or not) :

$> root4star -b -q Fill_Magnet.C

You should see some harsh words from Load Balancer, that's exactly what we need - LB should be disabled for our macros to work. Also, there should not be any segmentation violations. Initial macro run will take some time to process all runs known to date (see RunLog browser for run numbers).

Let's check if we see the entries in database:

$> mysql -h robinson.star.bnl.gov -e "use RunLog_onl; select count(*) from starMagOnl where entryTime > '2009-01-01 00:00:00' " ;

(entryTime should be set to current date)

+----------+

| count(*) |

+----------+

| 1589 |

+----------+

Now, check the run numbers and magnet current with :

$> mysql -h robinson.star.bnl.gov -e "use RunLog_onl; select * from starMagOnl where entryTime > '2009-01-01 00:00:00' order by entryTime desc limit 5" ;

+--------+---------------------+--------+-----------+---------------------+--------+---------+----------+----------+-----------+------------+------------------+

| dataID | entryTime | nodeID | elementID | beginTime | flavor | numRows | schemaID | deactive | runNumber | time | current |

+--------+---------------------+--------+-----------+---------------------+--------+---------+----------+----------+-----------+------------+------------------+

| 66868 | 2009-02-16 10:08:13 | 10 | 0 | 2009-02-15 20:08:00 | ofl | 1 | 1 | 0 | 10046008 | 1234743486 | -4511.1000980000 |

| 66867 | 2009-02-16 10:08:13 | 10 | 0 | 2009-02-15 20:06:26 | ofl | 1 | 1 | 0 | 10046007 | 1234743486 | -4511.1000980000 |

| 66866 | 2009-02-16 10:08:12 | 10 | 0 | 2009-02-15 20:02:42 | ofl | 1 | 1 | 0 | 10046006 | 1234743486 | -4511.1000980000 |

| 66865 | 2009-02-16 10:08:12 | 10 | 0 | 2009-02-15 20:01:39 | ofl | 1 | 1 | 0 | 10046005 | 1234743486 | -4511.1000980000 |

| 66864 | 2009-02-16 10:08:12 | 10 | 0 | 2009-02-15 19:58:20 | ofl | 1 | 1 | 0 | 10046004 | 1234743486 | -4511.1000980000 |

+--------+---------------------+--------+-----------+---------------------+--------+---------+----------+----------+-----------+------------+------------------+

If you see that, you are OK to start cron jobs (see "crontab -l") !

HOW-TO: online databases, scripts and daemons

Online db enclave includes :

primary databases: onldb.starp.bnl.gov, ports : 3501|3502|3503

repl.slaves/hot backup: onldb2.starp.bnl.gov, ports : 3501|3502|3503

read-only online slaves: mq01.starp.bnl.gov, mq02.starp.bnl.gov 3501|3502|3503

trigger database: db01.star.bnl.gov, port 3316, database: trigger

Monitoring:

http://online.star.bnl.gov/Mon/

(scroll down to see online databases. db01 is monitored, it is in offline slave group)

Tasks:

1. Slow Control data collector daemons

$> ssh stardb@onldb.starp.bnl.gov;

$> cd /online/production/database/Run_11/dbSenders;

./bin/ - contains scripts for start/stop daemons

./online/Conditions/ - contains source code for daemons (e.g. ./online/Conditions/run is RunLogDb)

See crontab for monitoring scripts (protected by lockfiles)

Monitoring page :

http://online.star.bnl.gov/admin/daemons/

2. Online to Online migration

- RunLogDb daemon performs online->online migration. See previous paragraph for details (it is one of the collector daemons, because it shares the source code and build system). Monitoring: same page as for data collectors daemons;

- migrateDaqFileTags.pl perl script moves data from onldb.starp:3503/RunLog_daq/daqFileTag to onldb.starp:3501/RunLog/daqFileTag table (cron-based). This script is essential for OfflineQA, so RunLog/daqFileTag table needs to be checked to ensure proper OfflineQA status.

3. RunLog fix script

$> ssh root@db01.star.bnl.gov;

$> cd online_db;

sum_insTrgCnt is the binary to perform various activities per recorded run, and it is run as cron script (see crontab -l).

4. Trigger data migration

$> ssh root@db01.star.bnl.gov

/root/online_db/cron/fillDaqFileTag.sh <- cron script to perform copy from online trigger database to db01

BACKUP FOR TRIGGER CODE:

1. alpha.star.bnl.gov:/root/backups/db01.star.bnl.gov/root

2. bogart.star.bnl.gov:/root/backups/db01.star.bnl.gov/root

5. Online to Offline migration

$> ssh stardb@onl13.starp.bnl.gov;

$> cd dbcron/macros-new; ls;

Fill*.C macros are the online->offline migration macros. There is no need in local/modified copy of the DB API, all macros use regular STAR libraries (see tcsh init scripts for details)

Macros are cron jobs. See cron for details (crontab -l). Macros are lockfile-protected to avoid overlap/pileup of cron jobs.

Monitoring :

http://online.star.bnl.gov/admin/status/

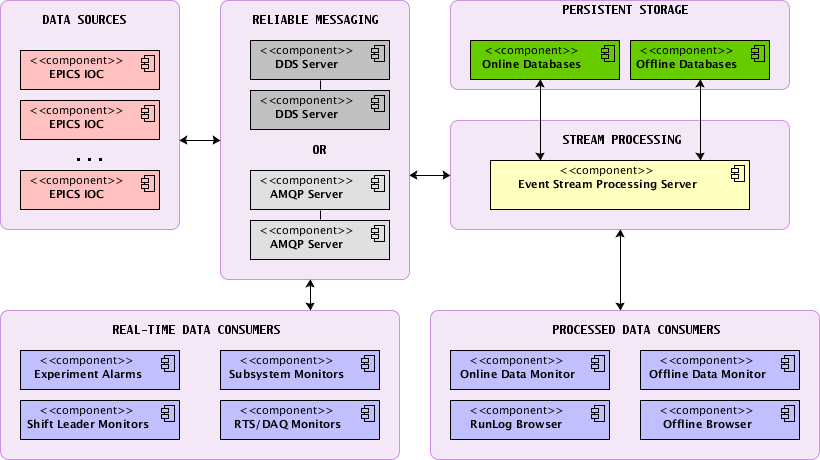

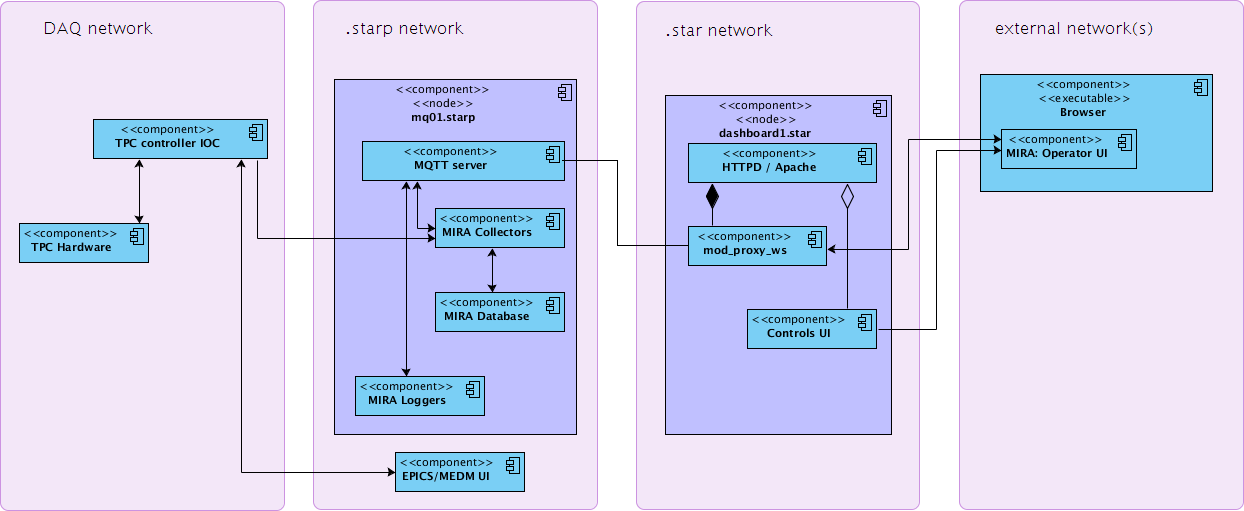

MQ-based Online API

MQ-based Online API

Intro

New Online API proposal: Message-Queue-based data exchange for STAR Online domain;

Purpose

Primary idea is to replace current DB-centric STAR Online system with industrial-strength Message Queueing service. Online databases will, then, take a proper data storage role, leaving information exchange to MQ server. STAR, as an experiment in-progress, is still growing every year, so standard information exchange protocol is required for all involved parties to enable efficient cross-communications.

It is proposed to leave EPICS system as it is now for Slow Controls part of Online domain, and allow easy data export from EPICS to MQ via specialized epics2mq services. Further, data will be stored to MySQL (or some other storage engine) via mq2db service(s). Clients could retrieve archived detector conditions either via direct MySQL access as it is now, or through properly formatted request to db2mq service.

[introduction-talk] [implementation-talk]

Primary components

- qpid: AMQP 0.10 server [src rpm] ("qpid-cpp-mrg" package, not the older "qpidc" one);

- python-qpid : python bindings to AMQP [src rpm] (0.7 version or newer);

- Google Protobuf: efficient cross-language, cross-platform serialization/deserialization library [src rpm];

- EPICS: Experimental Physics and Industrial Control System [rpm];

- log4cxx: C++ implementation of highly successful log4j library standard [src rpm];

Implementation

- epics2mq : service, which queries EPICS via EasyCA API, and submits Protobuf-encoded results to AMQP server;

- mq2db : service, which listens to AMQP storage queue for incoming Protobuf-encoded data, decodes it and stores it to MySQL (or some other backend db);

- db2mq : service, which listens to AMQP requests queue, queries backend database for data, encodes it in Protobuf format and send it to requestor;

- db2mq-client : example of client program for db2mq service (requests some info from db);

- mq-publisher-example : minimalistic example on how to publish Protobuf-encoded messages to AMQP server

- mq-subscriber-example : minimalistic example on how to subscribe to AMQP server queue, receive and decode Protobuf messages

Use-Cases

- STAR Conditions database population: epics2mq -> MQ -> mq2db; db2mq -> MQ -> db2mq-client;

- Data exchange between Online users: mq-publisher -> MQ -> mq-subscriber;

How-To: simple usage example

- login to [your_login_name]@onl11.starp.bnl.gov ;

- checkout mq-publisher-example and mq-subscriber-example from STAR CVS (see links above);

- compile both examples by typing "make" ;

- start both services, in either order. obviously, there's no dependency on publisher/subscriber start order - they are independent;

- watch log messages, read code, modify to your needs!

How-To: store EPICS channels to Online DB

- login to onl11.starp.bnl.gov;

- checkout epics2mq service from STAR CVS and compile it with "make";

- modify epics2mq-converter.ini - set proper storage path string, add desired epics channel names;

- run epics2mq-service as service: "nohup ./epics2mq-service epics2mq-converter.ini >& out.log &"

- watch new database, table and records arriving to Online DB - mq2db will automatically accept messages and create appropriate database structures!

How-To: read archived EPICS channel values from Online DB

- login to onl11.starp.bnl.gov;

- checkout db2mq-client from STAR CVS, compile with "make";

- modify db2mq-client code to your needs, or copy relevant parts into your project;

- enjoy!

MQ Monitoring

QPID server monitoring hints

To see what service is connected to our MQ server, one should use qpid-stat. Example:

$> qpid-stat -c -S cproc -I localhost:5672 Connections client-addr cproc cpid auth connected idle msgIn msgOut ======================================================================================================== 127.0.0.1:54484 db2mq-service 9729 anonymous 2d 1h 44m 1s 2d 1h 39m 52s 29 0 127.0.0.1:56594 epics2mq-servic 31245 anonymous 5d 22h 39m 51s 4m 30s 5.15k 0 127.0.0.1:58283 epics2mq-servic 30965 anonymous 5d 22h 45m 50s 30s 5.16k 0 127.0.0.1:58281 epics2mq-servic 30813 anonymous 5d 22h 49m 18s 4m 0s 5.16k 0 127.0.0.1:55579 epics2mq-servic 28919 anonymous 5d 23h 56m 25s 1m 10s 5.20k 0 130.199.60.101:34822 epics2mq-servic 19668 anonymous 2d 1h 34m 36s 10s 17.9k 0 127.0.0.1:43400 mq2db-service 28586 anonymous 6d 0h 2m 38s 10s 25.7k 0 127.0.0.1:38496 qpid-stat 28995 guest@QPID 0s 0s 108 0

MQ Routing

QPID server routing (slave mq servers) configuration

MQ routing allows to forward selected messages to remote MQ servers.

$> qpid-route -v route add onl10.starp.bnl.gov:5672 onl11.starp.bnl.gov:5672 amq.topic gov.bnl.star.#

$> qpid-route -v route add onl10.starp.bnl.gov:5672 onl11.starp.bnl.gov:5672 amq.direct gov.bnl.star.#

$> qpid-route -v route add onl10.starp.bnl.gov:5672 onl11.starp.bnl.gov:5672 qpid.management console.event.#

ORBITED automatic startup fix for RHEL5

HOW TO FIX "ORBITED DOES NOT LISTEN TO PORT XYZ" issue

/etc/init.d/orbited needs to be corrected, because --daemon option does not work for RHEL5 (orbited does not listen to desired port). Here what is needed:

Edit /etc/init.d/orbited and :

1. add

ORBITED="nohup /usr/bin/orbited > /dev/null 2>&1 &"

to the very beginning of the script, just below "lockfile=<bla>" line

2. modify "start" subroutine to use $ORBITED variable instead of --daemon switch. It should look like this :

daemon --check $prog $ORBITED

Enjoy your *working* "/sbin/service/orbited start" command ! Functionality could be verified by trying lsof -i :[your desired port], (e.g. ":9000") - it should display "orbited"

Online Recipes

- Restore RunLog Browser

- Restore Migration Code

- Restore Online Daemons

MySQL trigger for oversubscription protection

How-to enable total oversubscription check for Shift Signup (mysql trigger) :

delimiter |

CREATE TRIGGER stop_oversubscription_handler BEFORE INSERT ON Shifts

FOR EACH ROW BEGIN

SET @insert_failed := "";

SET @shifts_required := (SELECT shifts_required FROM ShiftAdmin WHERE institution_id = NEW.institution_id);

SET @shifts_exist := (SELECT COUNT(*) FROM Shifts WHERE institution_id = NEW.institution_id);

IF ( (@shifts_exist+1) >= (@shifts_required * 1.15)) THEN

SET @insert_failed := "oversubscription protection error";

SET NEW.beginTime := null;

SET NEW.endTime := null;

SET NEW.week := null;

SET NEW.shiftNumber := null;

SET NEW.shiftTypeID := null;

SET NEW.duplicate := null;

END IF;

END;

|

delimiter ;

Restore Migration Macros

The Migration Macros are monitored here, if they have stopped the page will display values in red.

- Call Database Expert 356-2257

- Log onto onlinux6.starp.bnl.gov - as stardb: Ask Michael, Wayne, Gene, Jerome for password

- check for runaway processes - for example, these are run as crons so a process starts before its previous exectution finishes, the process never ends and they build up

- If this is the case kill each of the processes

- cd to dbcron

Restore RunLog Browser

If the Run Log Browser is not Updating...

- make sure all options are selected and deselect "filter bad"

often - people will complain about missing runs and they are just not selected - Call DB EXPERT: 349-2257

- Check onldb.star.bnl.gov

- log onto node as stardb (password known by Michael, Wayne, Jerome, Gene)

- df -k ( make sure a disk did not fill) - If it did: cd into the appropriate data directory

(i.e. /mysqldata00/Run_7/port_3501/mysql/data) and copy /dev/null into the large log file onldb.starp.bnl.gov.log

`cp /dev/null > onldb.starp.bnl.gov.log` - make sure back-end runlog DAEMON is running

- execute /online/production/database/Run_7/dbSenders/bin/runWrite.sh status

Running should be returned - restart deamon - execute /online/production/database/Run_7/dbSenders/bin/runWrite.sh start

Running should be returned - if Not Running is returned there is a problem with the code or with daq

- contact DAQ expert to check their DB sender system

- refer to the next section below as to debugging/modifying recompiling code

- execute /online/production/database/Run_7/dbSenders/bin/runWrite.sh status

- Make sure Database is running

- mysql -S /tmp/mysql.3501.sock

- To restart db

- cd to /online/production/database/config

- execute `mysql5.production start 3501`

- try to connect

- debug/modify Daemon Code (be careful and log everything you do)

- check log file at /online/production/database/Run_7/dbSenders/run this may point to an obvious problem

- Source code is located in /online/production/database/Run_7/dbSenders/online/Condition/run

- GDB is not Available usless the code is recompiled NOT as a daemon

- COPY Makefile_debug to Makefile (remember to copy Makefile_good back to Makefile when finished)

- setenv DAEMON RunLogSender

- make

- executable is at /online/production/database/Run_7/dbSenders/bin

- gdb RunLog

Online Server Port Map

Below is a port/node mapping for the online databases both Current and Archival

Archival

| Run/Year | NODE | Port |

| Run 1 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3400 |

| Run 2 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3401 |

| Run 3 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3402 |

| Run 4 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3403 |

| Run 5 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3404 |

| Run 6 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3405 |

| Run 7 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3406 |

| Run 8 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3407 |

| Run 9 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3408 |

| Run 10 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3409 |

| Run 11 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3410 |

| Run 12 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3411 |

| Run 13 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3412 |

| Run 14 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3413 |

| Run 15 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3414 |

| Run 16 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3415 |

| Run 17 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3416 |

| Run 18 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3417 |

| Run 19 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3418 |

| Run 20 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3419 |

| Run 21 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3420 |

| Run 22 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3421 |

| Run 23 | dbbak.starp.bnl.gov / db04.star.bnl.gov | 3422 |

CURRENT (RUN 24)

| DATABASE | NODE | Port |

| [MASTER] Run Log, Conditions_rts, Shift Signup, Shift Log | onldb.starp.bnl.gov | 3501 |

| [MASTER] Conditions | onldb.starp.bnl.gov | 3502 |

| [MASTER] Daq Tag Tables | onldb.starp.bnl.gov | 3503 |

| [SLAVE] Run Log, Conditions_rts, Shift Signup, Shift Log | onldb2.starp.bnl.gov onldb3.starp.bnl.gov onldb4.starp.bnl.gov mq01.starp.bnl.gov mq02.starp.bnl.gov |

3501 |

| [SLAVE] Conditions | onldb2.starp.bnl.gov onldb3.starp.bnl.gov onldb4.starp.bnl.gov mq01.starp.bnl.gov mq02.starp.bnl.gov |

3502 |

| [SLAVE] Daq Tag Tables | onldb2.starp.bnl.gov onldb3.starp.bnl.gov onldb4.starp.bnl.gov mq01.starp.bnl.gov mq02.starp.bnl.gov |

3503 |

| [MASTER] MQ Conditions DB | mq01.starp.bnl.gov | 3606 |

| [SLAVE] MQ Conditions DB | mq02.starp.bnl.gov onldb2.starp.bnl.gov onldb3.starp.bnl.gov onldb4.starp.bnl.gov |

3606 |

| RTS Database (MongoDB cluster) | mongodev01.starp.bnl.gov mongodev02.starp.bnl.gov mongodev03.starp.bnl.gov |

27017 |

End of the run procedures:

1. Freeze databases (especially ShiftSignup on 3501) by creating a new db instance on the next sequential port from the archival series and copy all dbs from all three ports to this port.